I wrote this in early 2019 to process my grief and confusion about the morning of October 27, 2018. It was originally published in Brisket, and I am re-publishing it on my blog for the one-year anniversary.

On Saturdays, Jews from Jerusalem to Jacksonville, Florida, gather for morning prayers. “Jew” is a broad category, encompassing strict observers of Judaism as well as completely secular but self-identified ethnic Jews. Not all Jews practice the religion of Judaism, and those who do may choose to practice rituals, rules, and traditions in very different ways. But some number of Jews throughout the world leave their homes on Shabbat morning, a day of rest set apart from the rest of the week, to pray together with fellow Jews.

This does not look the same everywhere. Some go to Orthodox synagogues and others attend Reform temples. Some meet in large buildings and others in rented rooms. Some are organized into a congregation, others are more informal. Some affiliate with a movement like Reconstructionist, Reform, Conservative, or Orthodox Judaism, and others simply call themselves a community. There are services where prayer is led in Hebrew, others that prefer English, and many that use both. Some congregations will not begin without a minyan, the quorum of ten adults needed to sanctify the ritual, while others begin with optimism that people will trickle in a few minutes late. But regardless of where it happens and what traditions a group does (or does not) follow, these Jews have come together on Shabbat to read the Old Testament from the Torah’s scrolls.

On Shabbat mornings, services at Congregation B’nai Israel in Gainesville, Florida, begin at 9:30 AM. The congregation rarely begins with a minyan, but a congregant will be there to lead shacharit, the morning prayer service, and they stand at the front of the sanctuary. They begin with the Mah Tovu prayer. “Mah tovu ohalecha yaakov, mishkenotechah yisrael,” they sing. “How lovely are your sanctuaries, people of Jacob, your study houses, descendants of Israel.” This prayer acknowledges the interconnected modes of revelation, the revelation that comes through study and learning and the revelation that is God. The congregation joins, singing in Hebrew, “Your great love inspires me to enter Your house, to worship in Your holy sanctuary, filled with awe for You.”

If you visit B’nai Israel today, you enter a serene lobby, but if you had visited the synagogue in the early 2000s, back when I was a teenager, you would have entered through a set of nondescript glass doors into a small foyer. Wall-to-wall blue carpet dominated the space. You could have passed through it in five strides—into the social hall if you walked straight forward and into the sanctuary if you went right—grabbing a kippah from the yarmulke holder built into the wall as you went along your way.

A few years ago the congregation decided to enlarge the entry space to make the synagogue more welcoming and give a better first impression. They pulled up the blue carpet and laid tile. They pushed out the frontage of the building so congregants could mill about after services and meals. The blonde stone that used to be the facade of the building but now serves as an interior wall glows in the Florida sunshine, brightening the space. It is inviting.

By the time the leader recites the Shema Yisrael—the central prayer of the service, and one of the most important prayers in Judaism for its affirmation of monotheism, which concisely declares, “Hear, O Israel: the LORD our God, the LORD is one”—a few more congregants will have passed through the entryway and into the sanctuary. They file into their preferred pews, pick up prayer books, and rifle through the pages to catch up with the service. The head of the ritual committee—who has been filling that role since before my bat mitzvah—sits in his regular spot, and six or seven regular attendees who happen to arrive on the early side that morning will dot the pews. The rabbi is there, or will be there soon. Some of them are parents who have first deposited their young kids in childcare. But most are older, in age and tenure in the synagogue.

On Shabbat morning, October 27, 2018, Jews gathered to worship in the holy spaces of a synagogue in Pittsburgh’s Squirrel Hill neighborhood. Three congregations—two Conservative and one Reconstructionist—held services together but apart in the same building. Early-arriving congregants walked from their homes or their parking spots up the inclining slopes of Wilkins Avenue or Shady Avenue, arriving with cheeks flushed by the exertion and brisk air. Crossing through the building’s entryway, members of New Light headed downstairs to the basement, while Tree of Life-Or L’Simcha gathered in the chapel and Dor Hadash’s Torah study service assembled in the sanctuary. Already by 9:45 AM, 75 people bustled within the building.

Not everyone was there early to pray—or solely to pray. Richard Gottfried and Daniel Stein, members of New Light, were in the kitchen preparing for a congregational breakfast. Dr. Jerry Rabinowitz, Daniel Leger, and another Dor Hadash member set up for the morning’s services as they did every Shabbat. With a bris scheduled, they put out a table in the foyer with glasses for wine and whiskey. Cecil and David Rosenthal were there arranging the siddurim and talitot—prayer books and prayer shawls—and greeting fellow Tree of Life members as they entered the synagogue’s doors. At promptly 9:45 AM, with just two other New Light congregants assembled, Melvin Wax began chanting the first words of shacharit: “Mah tovu ohalecha yaakov, mishkenotechah yisrael.”

Five minutes later, an armed man walked into Tree of Life’s foyer where Cecil Rosenthal stood, ready to welcome him to Tree of Life.

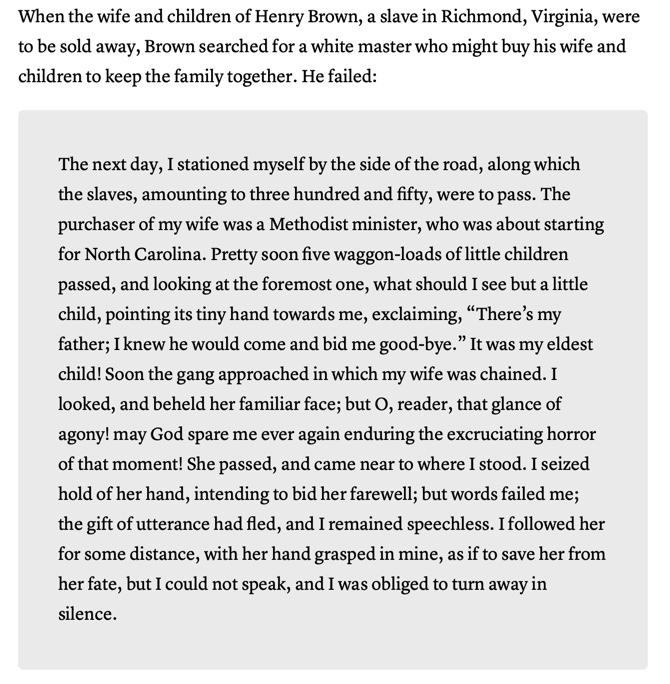

That was the morning I learned a new term: stochastic terrorism. This expression describes how mass communication can be used to motivate random actors to violence. [1] The violence itself is predictable, because it has been incited by public calls to take action, but no single violent action or event can be predicted. [2] For example, it was not random that a man with a gun decided to punish Jews on a Saturday morning in October because of their support for immigrants and refugees. The President tweeted on October 22 that “Mexico’s Police and Military are unable to stop the Caravan heading to the Southern Border of the United States. Criminals and unknown Middle Easterners are mixed in.” He described it as a “National Emerg[ency],” and three days later he deployed troops to the border. [3] So it was random that it was a man in Western Pennsylvania who packed up his car and drove to Pittsburgh on the 27th. But it could have been any number of gun-owning white nationalists fearing that immigrant criminals were infiltrating their country.

Just as the perpetrator was not entirely random, neither were the victims. It was not random that Jews were killed that Shabbat—it was random which Jews were killed. There are other synagogue buildings in Squirrel Hill, Pittsburgh, and the region that could have been targeted. And there are other congregants who could have, indeed might have, been in Tree of Life’s building that morning. A weekend with a bar or bat mitzvah, for example, would have increased attendance, even at that early hour.

There are, however, factors that even the stochastic nature of this violence would not have changed: the aging populations of Jewish congregations, and the burden of care that older members of communities overwhelmingly shoulder while younger members labor to meet the demands of late-stage capitalism. It is the loss of these communal caretakers, as much as the loss of life and the fear inculcated by terrorism, that made these murders a tragedy.

It was me who was in Pittsburgh on October 27, not my parents, but they were at greater risk than I was on that morning. They are regular synagogue attendees, not me. Had this stochastic event struck in North Central Florida instead of Pittsburgh, perhaps my parents would have been among the 11 killed.

If violence entered B’nai Israel at 9:50 AM and walked through the sunlit lobby, it would find at least 25 people in the building—fewer than 75, certainly, but for a single congregation and not three. Violence would find the handful of congregants who take turns leading the earliest part of the morning service, and the members who arrive early to make up the minyan. Violence would find the synagogue’s leadership, including several officers and board members as well as the rabbi and the executive director. The congregation’s leadership capacity—in prayer and governance—would be significantly depleted in just a few minutes. Someone new would have to step up to manage the relentless coordination of Torah and Haftorah readers and leaders for Shabbat services. There would be fewer people to ask to read and lead. Members would have to be elected to the Board, to serve without access to valuable wells of institutional memory.

This would be true at many Conservative or Reform synagogues in the United States. [4] Congregations are aging because the American Jewish community is older compared to the broader adult population; 26 percent of Jews are older than 65, compared to 19 percent nationally. (The exception to this demographic trend is in Orthodox communities, which have younger median ages as a result of higher birthrates.) [5] In 2010, the FACT-Synagogue 3000 survey of 946 Conservative and Reform congregations found that “young people between the ages of 18 and 34 represent a scant 8 percent” of membership, compared to 24 percent aged 65 and older. [6] The latter figure is even more pronounced in smaller congregations (fewer than 250 families), where 30 percent of members are older than 65. Consequently, congregations are shrinking. The same survey found that “Saturday morning services in Conservative synagogues drew an average of 24 worshipers for every 100-member families. Friday evening services at Reform temples drew an average of 17 worshipers for every 100 families.” [7]

Although Pittsburgh is currently the 32nd largest Jewish community in the United States, with a population of 50,000, it is still subject to these same trends. [8] Congregations are merging, selling buildings, and consolidating resources. Tree of Life, the second-oldest congregation in Pittsburgh and one of the oldest synagogues in the United States, merged with upstart congregation Or L’Simcha in 2010 after several years of renting them space in their capacious building, which was constructed in the 1950s at a time when Tree of Life was a much larger congregation. This infused over 100 members into Tree of Life’s declining congregation, which was losing people to aging and to suburban synagogues. In the same year, Dor Hadash became a tenant in the building. New Light Congregation, like Tree of Life, was founded in the nineteenth century and experienced growth throughout the mid-twentieth century, but they too found themselves with a shrinking membership by the early twenty-first. In 2017 they also moved in with Tree of Life after selling their own building to Chabad’s rapidly expanding Yeshiva Schools, holding their services in the basement while the other two congregations used the main sanctuary and the chapel. [9]

Most synagogues could use an infusion of youth into their leadership. But there are structural reasons why these roles tend to be filled by older congregants. They are established in careers, or perhaps even retired. If they have children, they are older and more independent. They have time to volunteer to do the hard work of sustaining a community.

If ethnographic or sociological studies have been done of synagogue lay leadership, they are not easily found. When I asked Rabbi David Kaiman of B’nai Israel why he thinks synagogue leadership is dominated by older folks, he noted that the synagogue increasingly becomes a place for routine socializing as people age. As older adults age out of the social orbits around schools and workplaces, religious spaces continue to offer connection and engagement.

I do not bemoan the aging and shrinking of American congregations, because I do not see anything wrong with secular Jewish identity and because I am optimistic that these institutions will give way to new ways of practicing Judaism. But there is great anxiety within the Jewish community about their loss, and the question of how to attract younger Jews to synagogue has been the subject of much debate, experimentation, and investment. [10] Congregations have lowered their dues, renovated their spaces, revamped their programming, and placed greater emphasis on social justice—welcoming in Jews who previously felt excluded because of their class, race, or politics, or because they converted, or because they lack proficiency with Judaism or Hebrew. Synagogues have been so eager to invite people in and make their sanctuaries more welcoming—to Jews, of course, but also to communities of other faiths, immigrants, and other minority communities—that they inadvertently became a threat to white nationalism. With their lobbies and greeters and come-one-come-all enthusiasm, synagogues became targets, and vulnerable ones at that.

Had this stochastic event struck in North Central Florida on October 27, my parents likely would not have been in synagogue as early as 9:50 AM. If my mom is not leading shacharit or volunteering in the kitchen to help prepare the lunch that follows services, they tend to arrive around 10:15 AM, when the congregation begins to read from the Torah. Would that have spared my parents from being among the victims?

Possibly, but likely not. To read through the list of victims is to read through the archetypes that make up many American Jewish congregations. [11] Rose Mallinger, Melvin Wax, and the Rosenthal brothers attended synagogue every week without fail. Mallinger helped prepare breakfast for the congregation for many years. Joyce Fienberg and Irving Younger liked to greet fellow attendees as they entered the building on Shabbat mornings. A co-president of New Light, Stephen Cohen, told the New York Times that Wax, Daniel Stein, and Richard Gottfried were “the people who conducted our services, they did Torah readings.” [12] Every synagogue has its own Rose, Melvin, Cecil, David, Joyce, and Irving, as well as other archetypes. My brother, who spent two years working at a Conservative synagogue, claims that every congregation has “a Gary”—an older malcontent who complains about children. Rabbi Kaiman told me a story about his brother, who returned from visiting Conservative synagogues around the country and swore that every single one had a life insurance salesman responsible for getting together ten people for a minyan three times a week.

My parents are among “the regulars” at Shabbat services. My mother has served on the synagogue’s Board of Directors and is the long-time president of the Sisterhood. Their social lives revolve around B’nai Israel, and their closest friends are all fellow congregants. They are both in their 60s.

It was the day after the shooting, at the vigil held on Sunday afternoon, that I heard Rabbi Meyers of Tree of Life describe what he witnessed the previous morning. I stood outside the Soldiers and Sailors Memorial in the rain with the hundreds of other people who arrived too late to find a seat inside. We listened as the speeches were broadcast over loudspeakers. “There were 12 of us in the sanctuary at that time,” Rabbi Meyers said, “And as is customary in the Jewish faith—and I’ve also seen it in other faiths—all the early people come and sit in the back.” [13] The image he conjured with those words squeezed tight around my heart and shortened my breath and drew tears from my eyes. All of Tree of Life’s leaders, praying together, standing in a row.

Amidst all of the heterogeneity of global Jewry—our diverse rituals, traditions, politics, and spaces of worship—I was struck by this fundamental similarity. The older people come early and join their friends in the back row. I thought of my parents and their friends, sitting together in the same three back rows every week.

“The three people who died are the heart of our congregation,” Cohen told the Times. “It’s a stab in the heart.” [14] This is the underlying tragedy of these murders. Whether or not you believe in the preservation of organized religion, or the traditional American synagogue, these members did. They found immense value and comfort and community in belonging to their synagogues, and they helped create and sustain their congregations for others who felt similarly. Their murders meant a loss of invaluable human capital and of the capacity needed to maintain communities. And that’s the real tragedy at the heart of this story: their killer was more successful than he even realized.

Yet in the end he did not meet his objective. Today, Tree of Life, Dor Hadash, and New Light continue to meet every Shabbat. Two other local congregations with grand buildings host them in their chapels and classrooms. With painful resilience, their members have resumed Sisterhood and Men’s Club activities, planned Purim celebrations, held committee meetings, and strategized about their futures. In the coming months, each congregation will receive an allocation from the donations made in the wake of the tragedy (money I imagine they would rather not have, considering the circumstances, but that nonetheless will help sustain their operations). [15] Their endurance does not belie the tragedy—it is a refusal to let white nationalism win.

1. Thank you to my friend Phil Rocco, a political scientist and native Pittsburgher, for teaching me this term and thereby providing the framing for this essay.

2. Jared Keller, “To Discuss the Pittsburgh Synagogue Shooting, We Have to Discuss Trump,” Pacific Standard, October 29, 2018.

3. Opheli Garcia Lawler, “What’s Important to Know About the Migrant Caravan,” The Cut, October 30, 2018.

4. It is difficult to find data on the Reconstructionist movement.

5. “Jewish Population in the US,” Steinhardt Social Research Institute at the Cohen Center for Modern Jewish Studies, Brandeis University. Consulted February 2019.

6. Steven M. Cohen, Lawrence A. Hoffman, Jonathon Ament, and Ron Miller, “Conservative and Reform Congregations in the United States Today: Findings from the FACT-Synagogue 3000 Survey of 2010,” 2011.

7. S3K Synagogue Studies Institute, “Reform and Conservative Congregations: Different Strengths, Different Challenges,” S3K Report, March 2012.

8. As of 2018. Berman Jewish DataBank, “Pocket Demographics 2.0: US Jewish Population,” 2018.

9. Adam Reinherz, “Yeshiva Schools purchases former home of New Light Congregation,” Pittsburgh Jewish Chronicle, December 20, 2017.

10. For example: Rabbi Michael Knopf, “What’s Driving Jews Away from Synagogues? Not Dues, but ‘Membership,’” Haaretz, May 26, 2016; Ben Sales, “More synagogues are getting rid of their mandatory dues,” Jewish Telegraphic Agency, May 30, 2017.

11. Moriah Balingit, Kristine Phillips, Amy B. Wang, Deanna Paul, Wesley Lowery, and Kellie B. Gormley, “The Lives Lost in the Pittsburgh Synagogue Shooting,” The Washington Post, October 28, 2018.

12. Campbell Robertson, Sabrina Tavernise, and Sandra E. Garcia, “Quiet Day at Pittsburgh Synagogue Becomes a Battle to Survive,” The New York Times, October 28, 2018.

13. Rabbi Hazzan Jeffery Meyers, “Rabbi Hazzan Jeffery Meyers Addresses Pittsburgh Vigil,” Cantors Assembly, October 30, 2018.

14. Robertson, Tavernise, and Garcia, “Quiet Day at Pittsburgh Synagogue Becomes a Battle to Survive.”

15. Jewish Federation of Greater Pittsburgh Victims of Terror Fund, “Independent Committee Report,” March 5, 2019.